Howdy! I'm a Postdoctoral Associate in the Department of Computer Science at Rice University, working with Prof. Xia "Ben" Hu and Prof. Hanjie Chen to explore Efficient AI, especially for LLMs, Diffusion Models, and Multimodal LLMs. Before joining Rice, I completed my Ph.D. at Rutgers University, advised by Prof. Bo Yuan. In Spring 2024, I was a Research Intern on the Creative Vision team at Snap Research, where I proposed BitsFusion, a 1.99-bit quantization of a text-to-image generative model. In 2022, I was a Research Intern at Media Lab, Tencent America, exploring the efficiency and robustness of Learned Image Compression and Transformer models. In 2019, I was a full-time Algorithm Engineer at JD, working on face verification and recognition. In 2018, I spent a wonderful time at Baidu as a Research and Development Intern and a member of PaddlePaddle (20.4k stars now), where I helped initiate the deep learning inference framework Paddle-Lite (6.6k stars now), which was featured at NeurIPS Expo, Baidu Create, and Wave Summit+.

In addition to my academic work, I am passionate about basketball, DOTA/DOTA2, and World of Warcraft. I love Tracy McGrady, Stephen Curry, Lionel Messi, and PIS (YaphetS).

My research focuses primarily on Efficient AI and Trustworthy AI. In Efficient AI, I develop techniques for building resource-efficient deep learning models without compromising their accuracy or performance. I design compression methods, such as pruning, quantization, and low-rank approximation, that reduce the size and complexity of deep learning models, with the goal of facilitating their deployment on resource-constrained devices such as mobile phones and embedded systems. In Trustworthy AI, I investigate the vulnerability and robustness of AI models through adversarial attacks and backdoor attacks. I am dedicated to understanding how AI models behave in the face of these malicious threats and to developing defense mechanisms that make them more robust against such attacks. Specifically, my research areas include:

Technologies:

Previous "Efficient Deep Learning Reading Group" Sessions:

(*: Equal Contribution; ‡: Corresponding Author/Project Lead)

2025

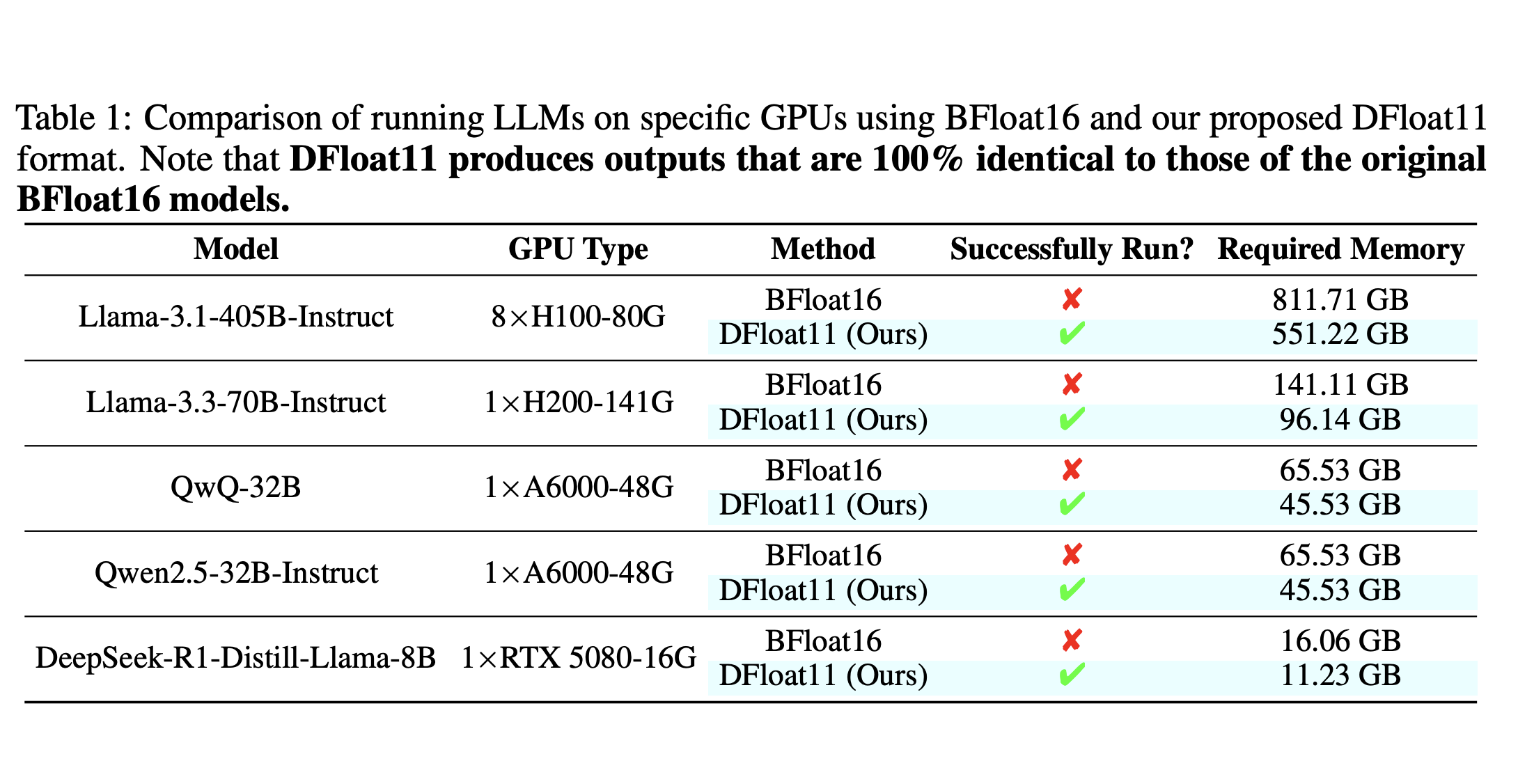

Tianyi Zhang, Yang Sui, Shaochen Zhong, Vipin Chaudhary, Xia Hu, Anshumali Shrivastava [arXiv 2025] GitHub DFloat11 Models in Hugging Face Xinzhiyuan (新智元) Synced (机器之心) arXiv

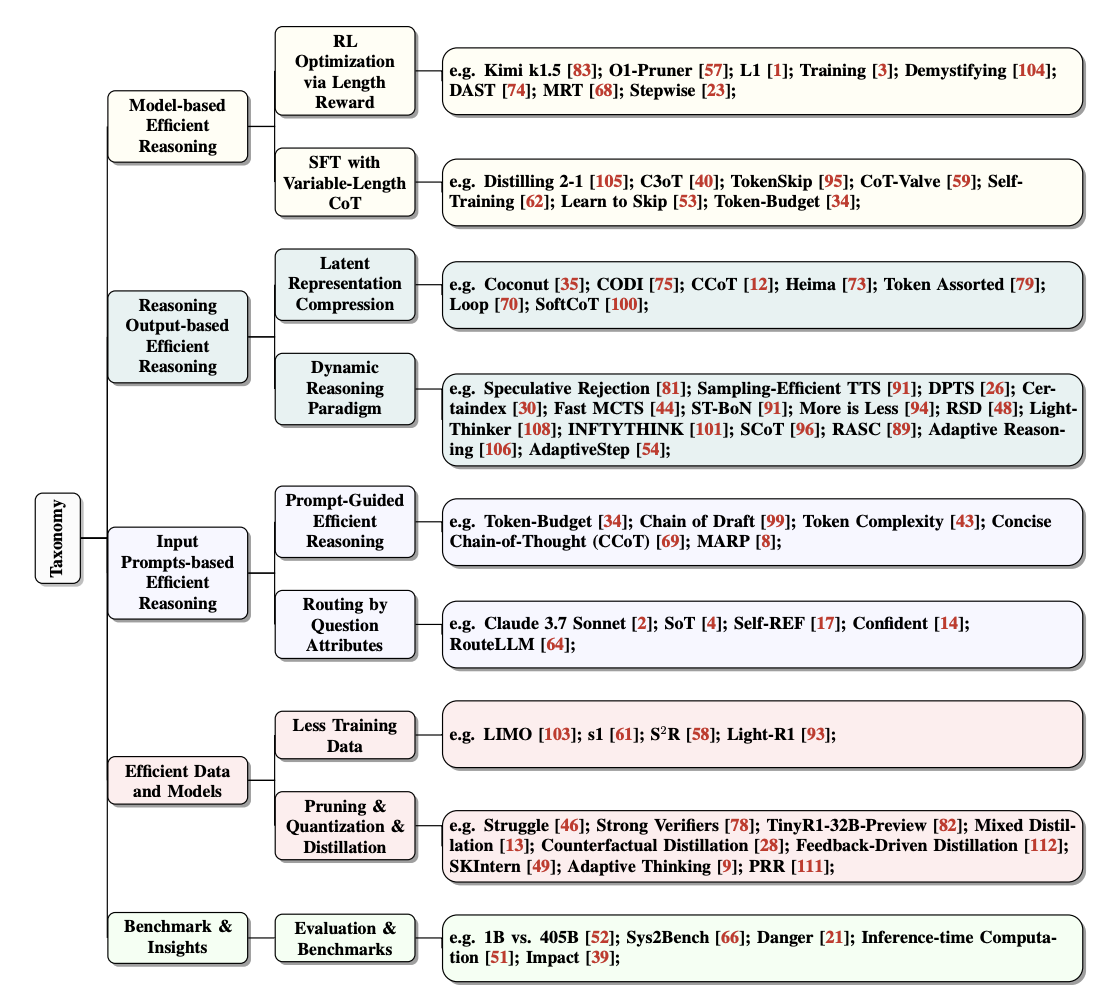

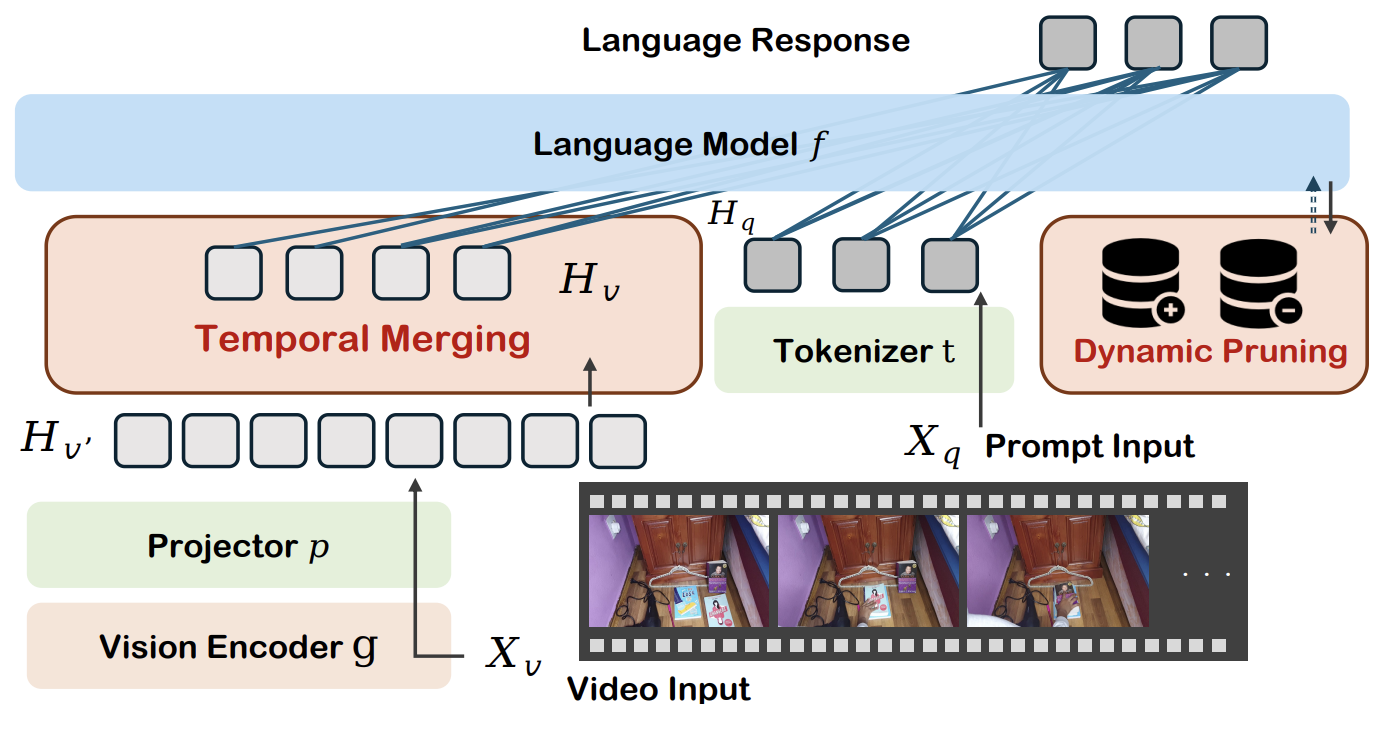

Yang Sui, Yu-Neng Chuang, Guanchu Wang, Jiamu Zhang, Tianyi Zhang, Jiayi Yuan, Hongyi Liu, Andrew Wen, Shaochen Zhong, Hanjie Chen, Xia Hu [arXiv] LinkedIn Recommendation LinkedIn Recommendation X Xinzhiyuan (新智元) Daily Paper in Hugging Face arXiv GitHub

Cheng Yang, Yang Sui‡, Jinqi Xiao, Lingyi Huang, Yu Gong, Chendi Li, Jinghua Yan, Yu Bai, Ponnuswamy Sadayappan, Xia Hu, Bo Yuan [CVPR 2025] The IEEE/CVF Computer Vision and Pattern Recognition Conference arXiv

Yushu Wu, Zhixing Zhang, Yanyu Li, Yanwu Xu, Anil Kag, Yang Sui, Huseyin Coskun, Ke Ma, Aleksei Lebedev, Ju Hu, Dimitris Metaxas, Yanzhi Wang, Sergey Tulyakov, Jian Ren [CVPR 2025] The IEEE/CVF Computer Vision and Pattern Recognition Conference arXiv Project Page

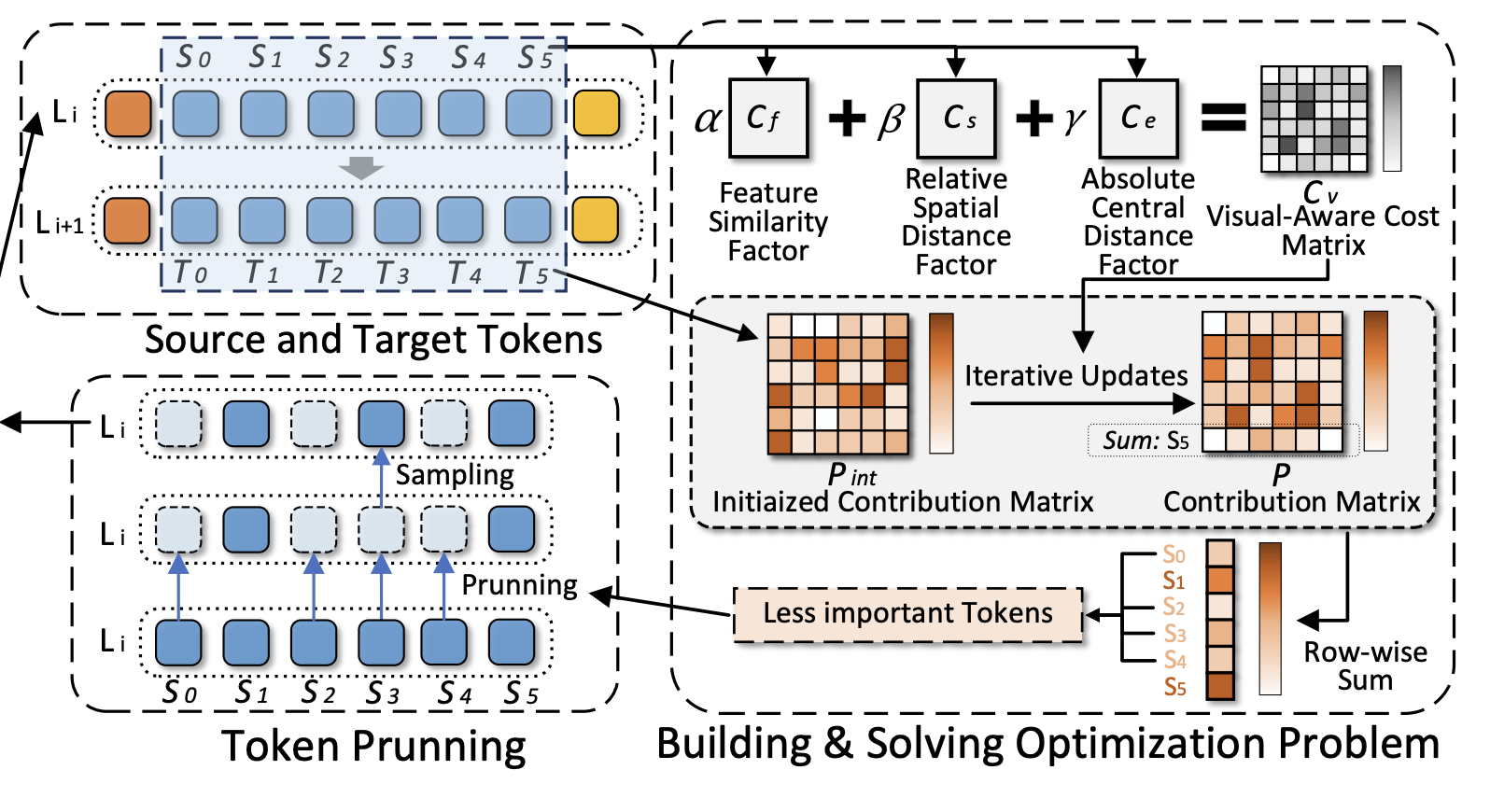

Keda Tao, Can Qin, Haoxuan You, Yang Sui, Huan Wang [CVPR 2025] The IEEE/CVF Computer Vision and Pattern Recognition Conference arXiv GitHub

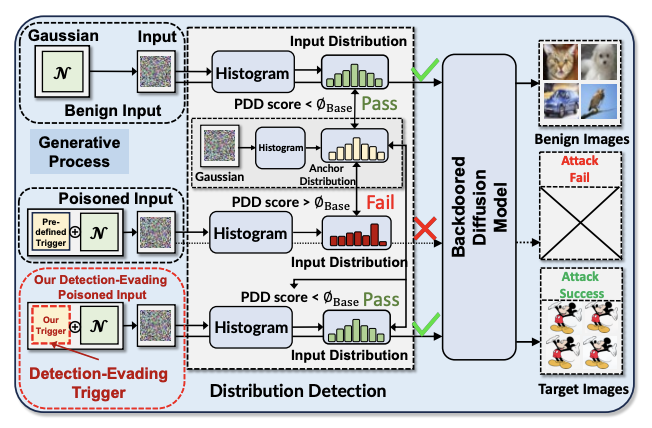

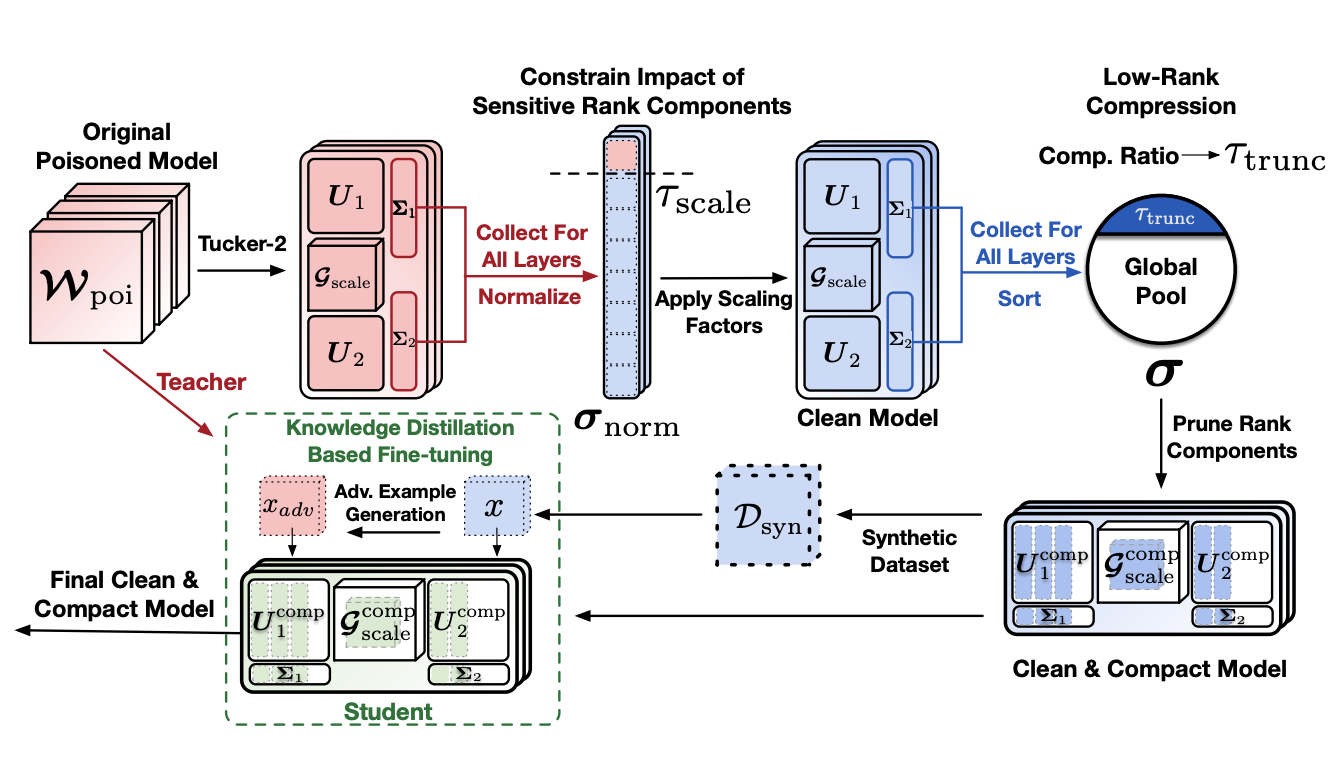

Yang Sui, Huy Phan, Jinqi Xiao, Tianfang Zhang, Zijie Tang, Cong Shi, Yan Wang, Yingying Chen, Bo Yuan [TMLR 2025] Transactions on Machine Learning Research arXiv

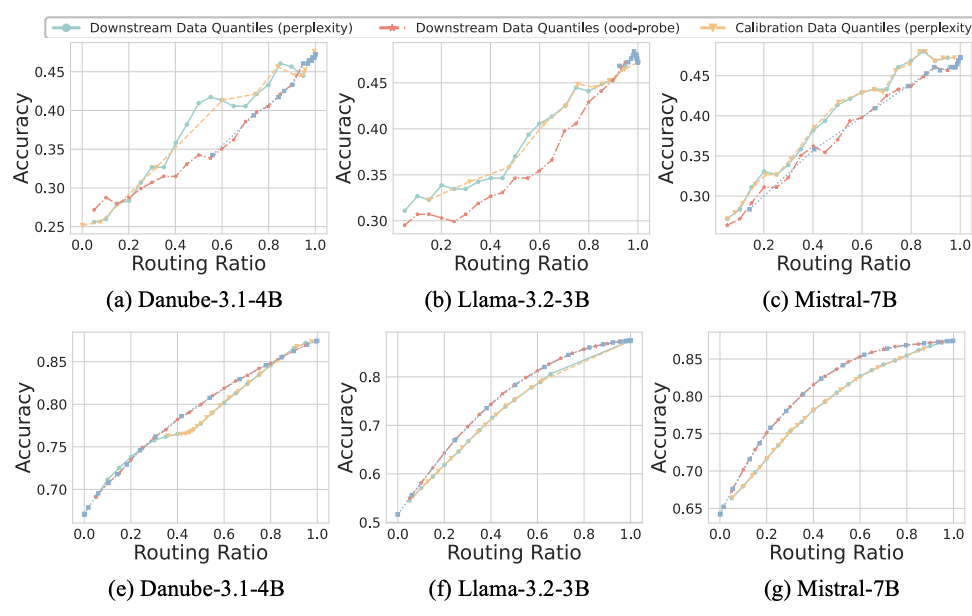

Yu-Neng Chuang, Leisheng Yu, Guanchu Wang, Lizhe Zhang, Zirui Liu, Xuanting Cai, Yang Sui, Vladimir Braverman, Xia Hu [arXiv] arXiv

2024

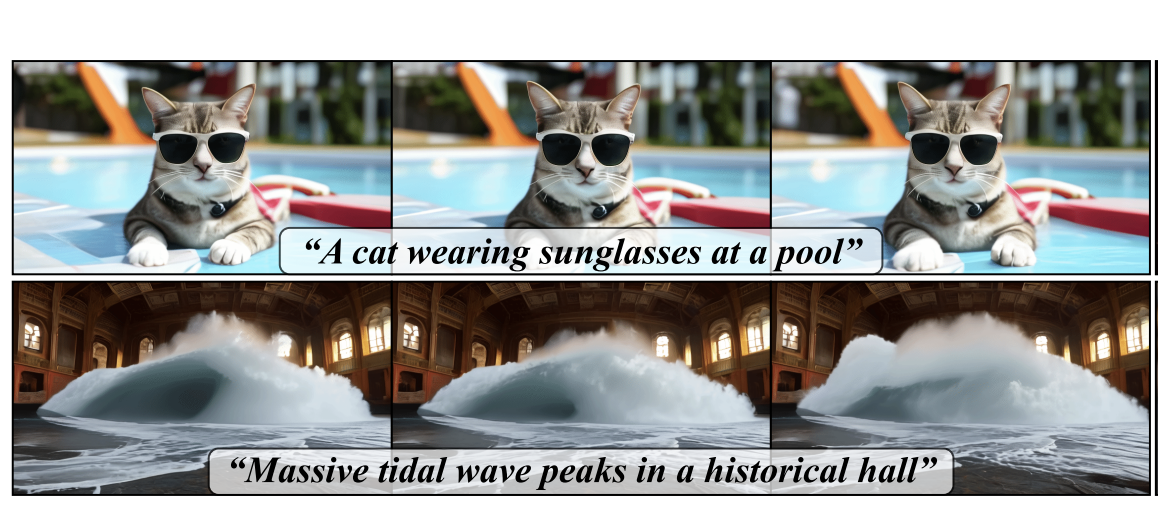

Yang Sui, Yanyu Li, Anil Kag, Yerlan Idelbayev, Junli Cao, Ju Hu, Dhritiman Sagar, Bo Yuan, Sergey Tulyakov, Jian Ren [NeurIPS 2024] The Thirty-eighth Annual Conference on Neural Information Processing Systems Daily Paper in Hugging Face LinkedIn Recommendation Reddit Discussion arXiv Project Page Video

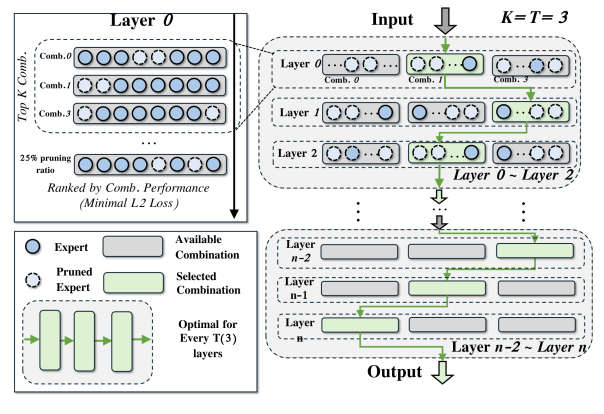

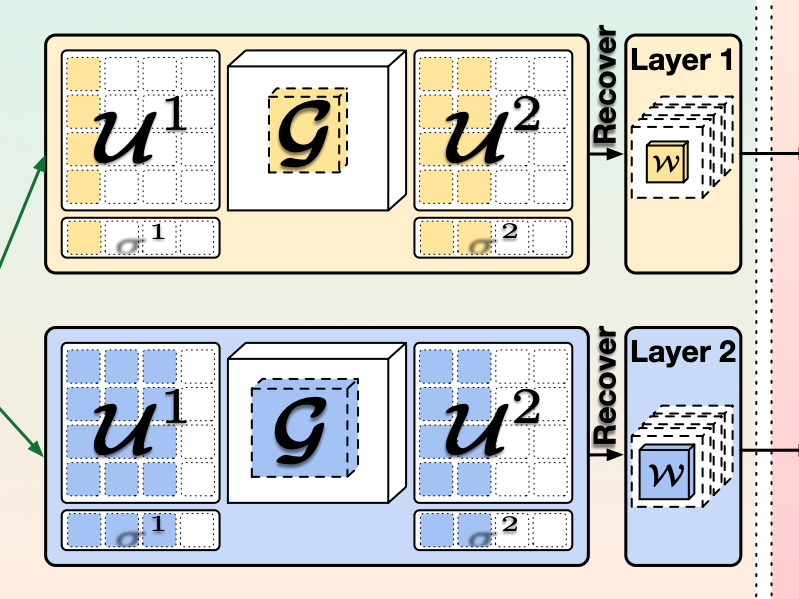

Cheng Yang*, Yang Sui*, Jinqi Xiao, Lingyi Huang, Yu Gong, Yuanlin Duan, Wenqi Jia, Miao Yin, Yu Cheng, Bo Yuan [EMNLP 2024 Findings] The 2024 Conference on Empirical Methods in Natural Language Processing

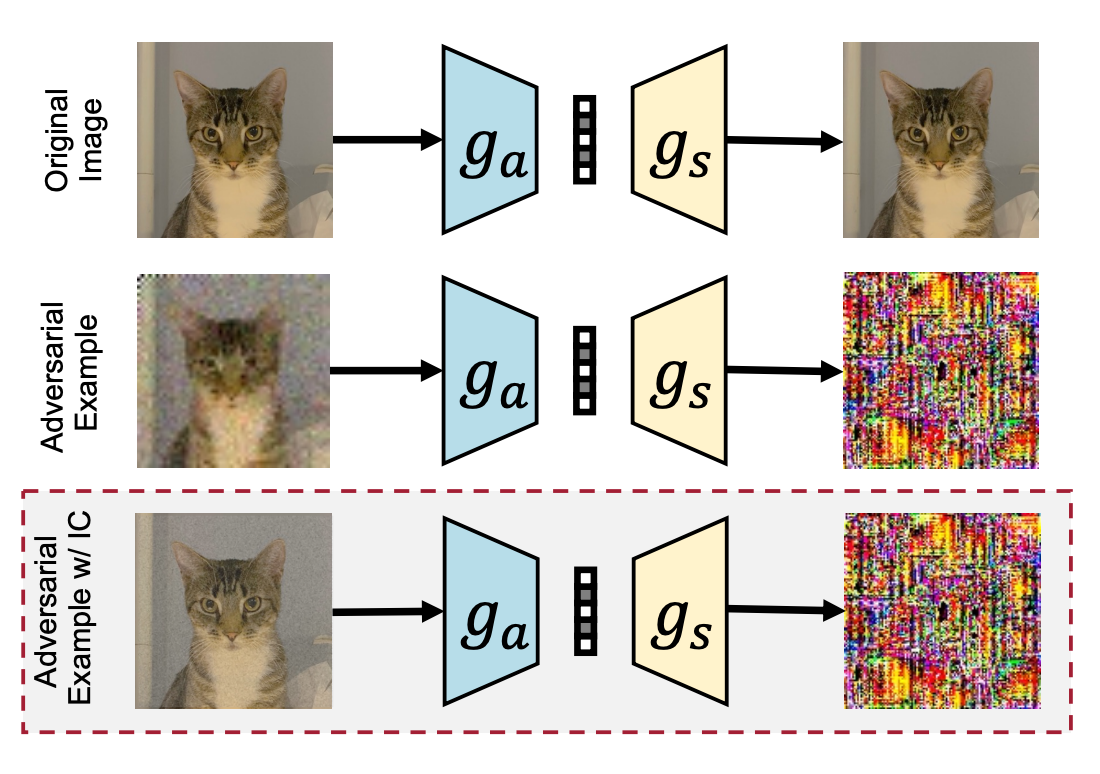

Yang Sui, Zhuohang Li, Ding Ding, Xiang Pan, Xiaozhong Xu, Shan Liu, Zhenzhong Chen [BMVC 2024] The 35th British Machine Vision Conference, 2024 arXiv

Huy Phan, Jinqi Xiao, Yang Sui, Tianfang Zhang, Zijie Tang, Cong Shi, Yan Wang, Yingying Chen, Bo Yuan [ECCV 2024] The 18th European Conference on Computer Vision ECCV 2024

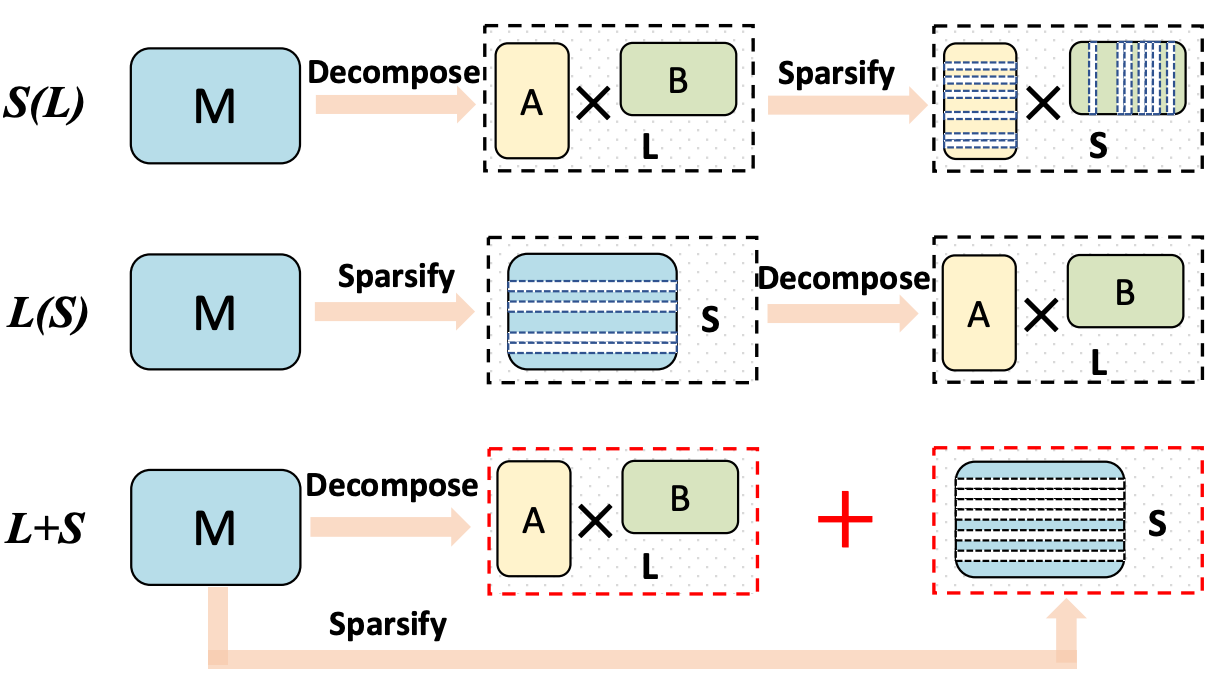

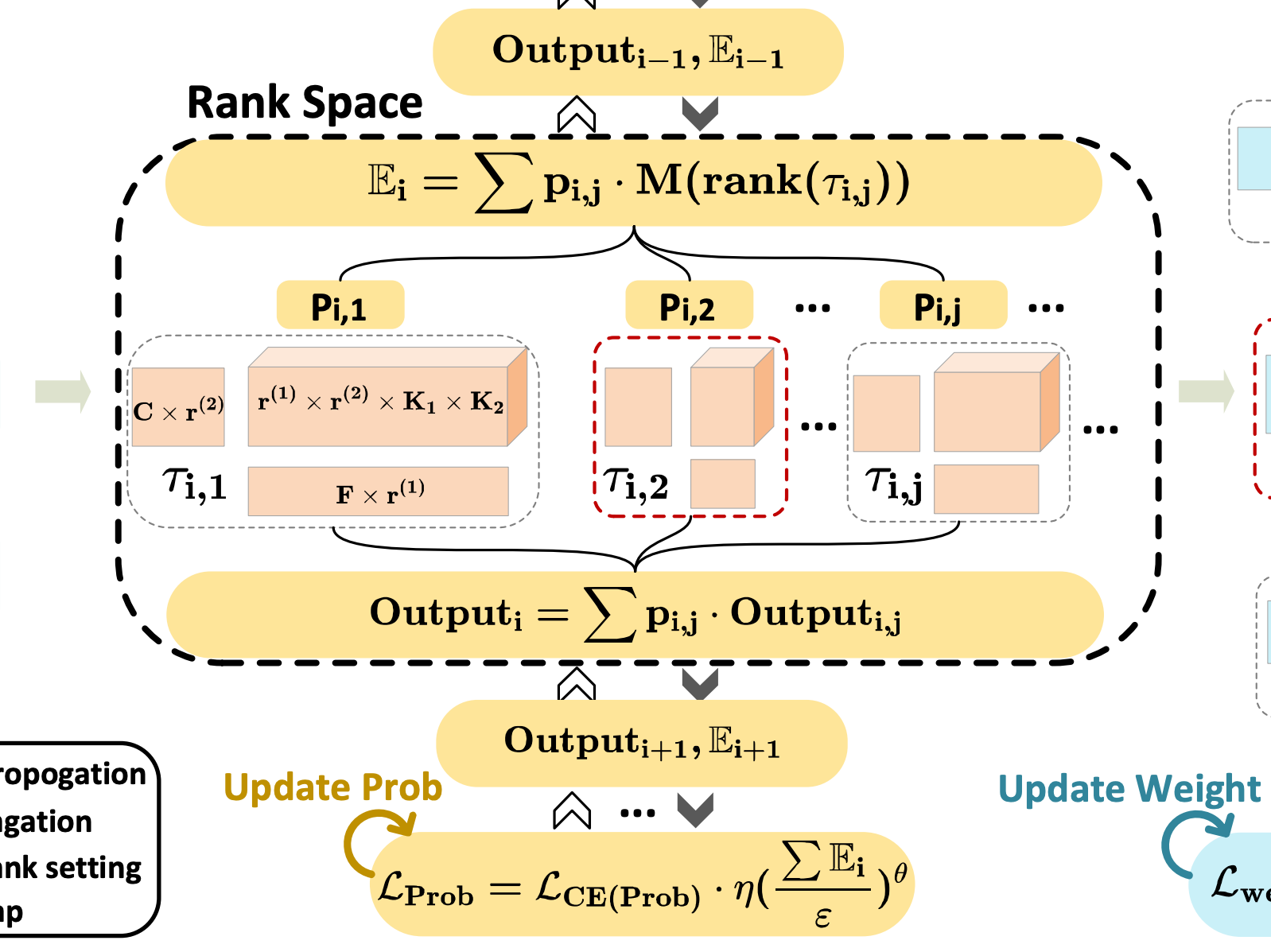

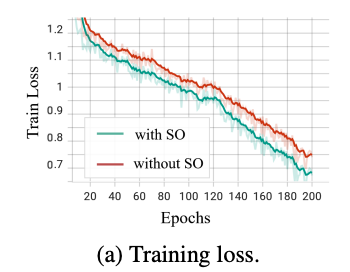

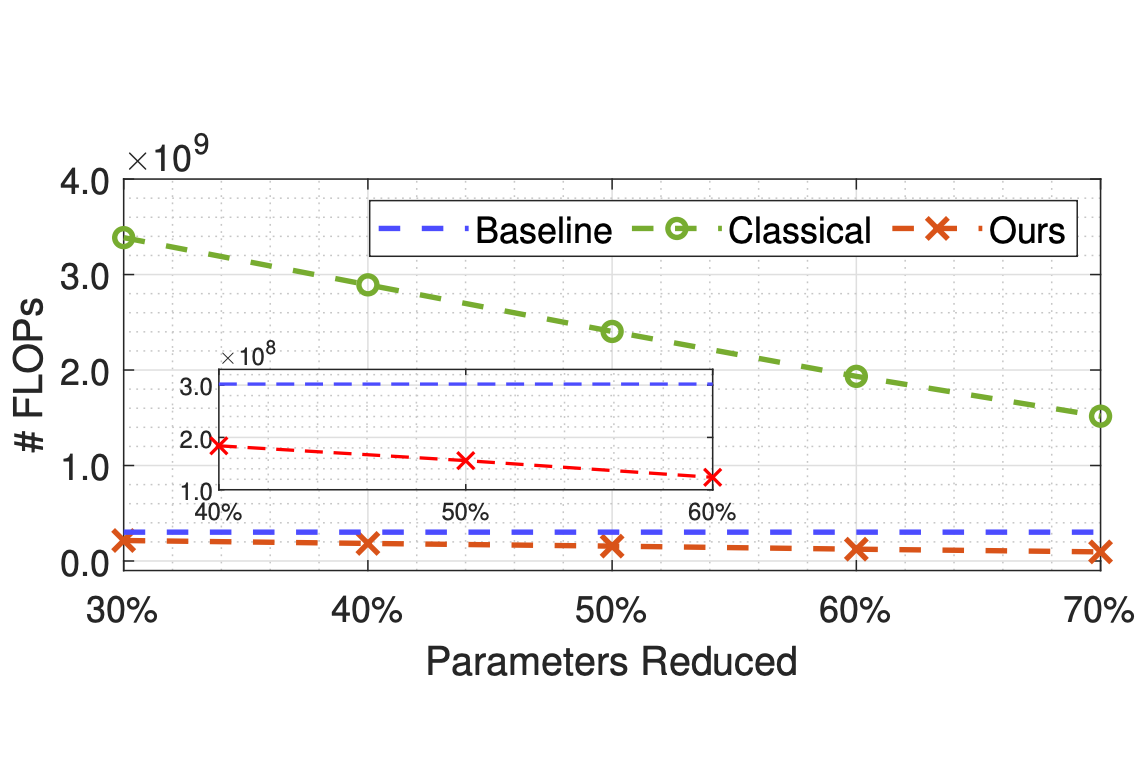

Yang Sui, Miao Yin, Yu Gong, Bo Yuan [TNNLS] IEEE Transactions on Neural Networks and Learning Systems

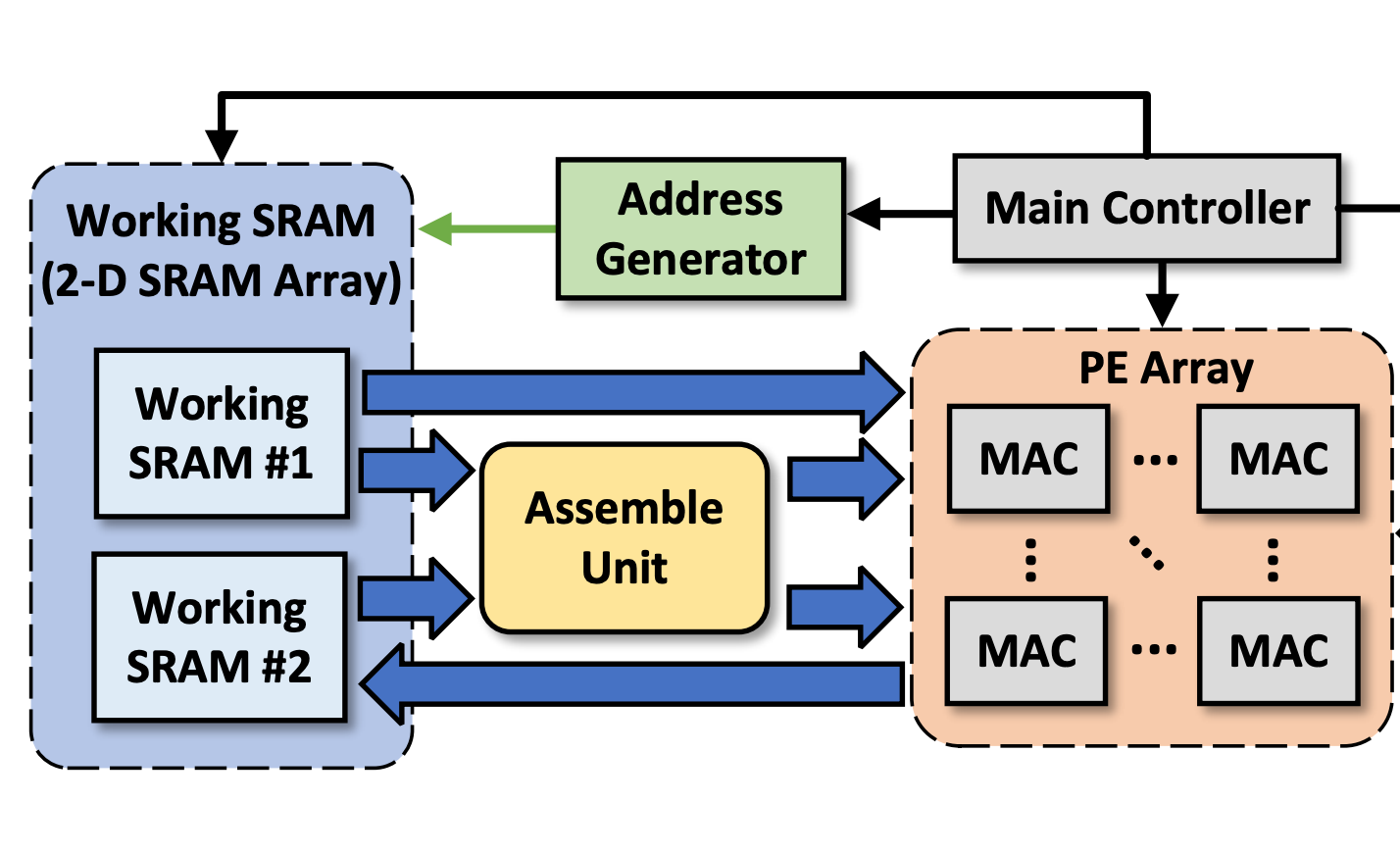

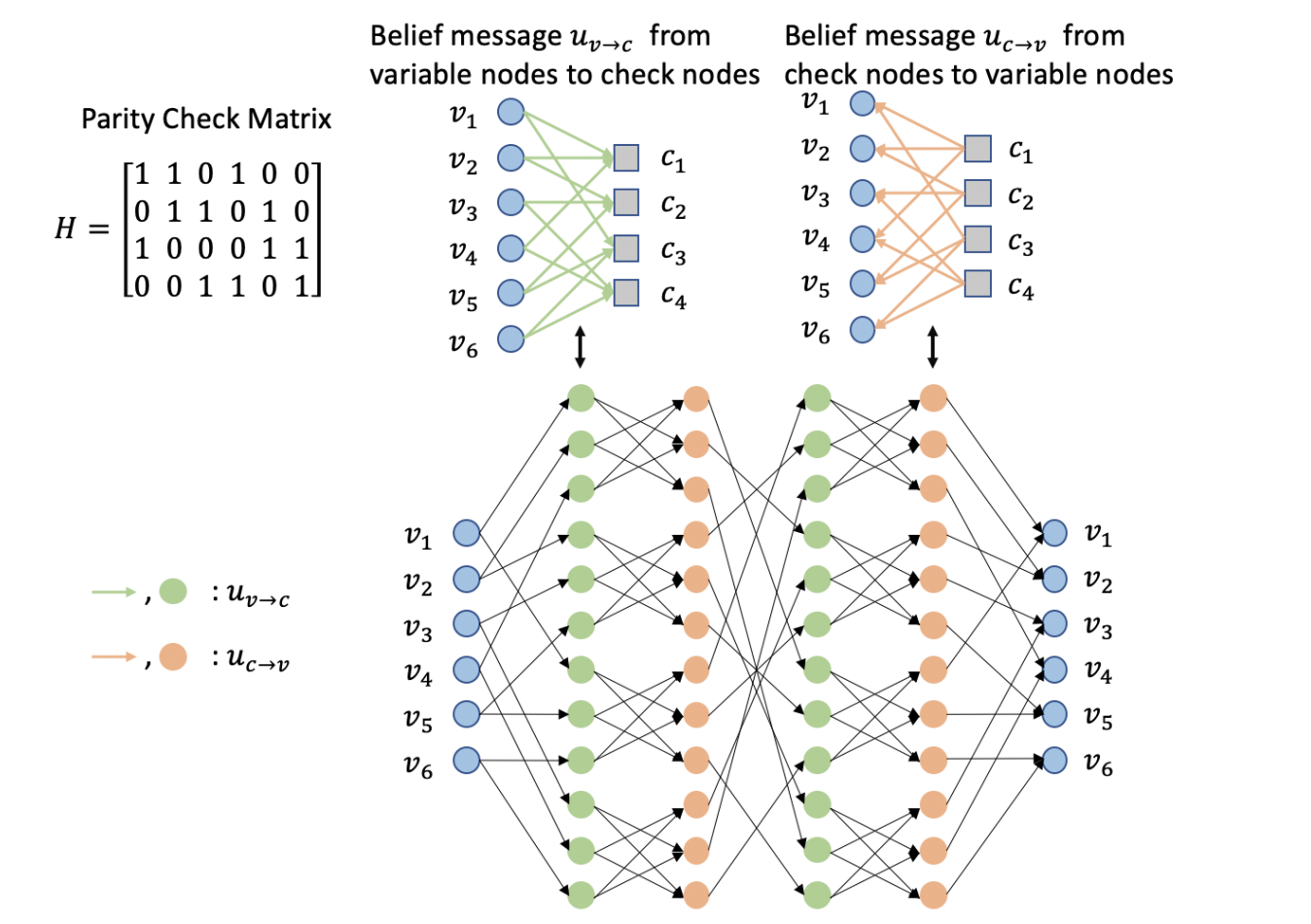

Lingyi Huang, Yu Gong, Yang Sui, Xiao Zang, Bo Yuan [HPCA 2024] In Proceedings of the IEEE International Symposium on High-Performance Computer Architecture, 2024

2023

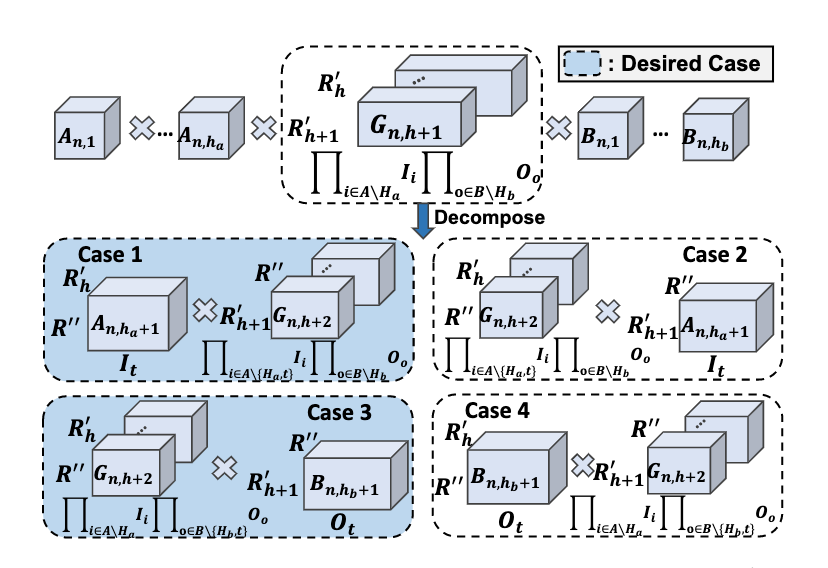

Yang Sui, Minning Zhu, Lingyi Huang, Chung-Tse Michael Wu, Bo Yuan [ICCAD 2023] In Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, 2023

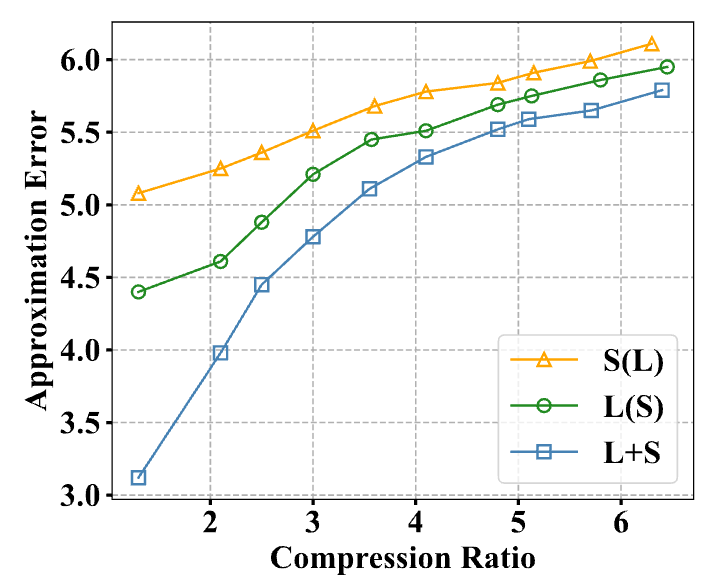

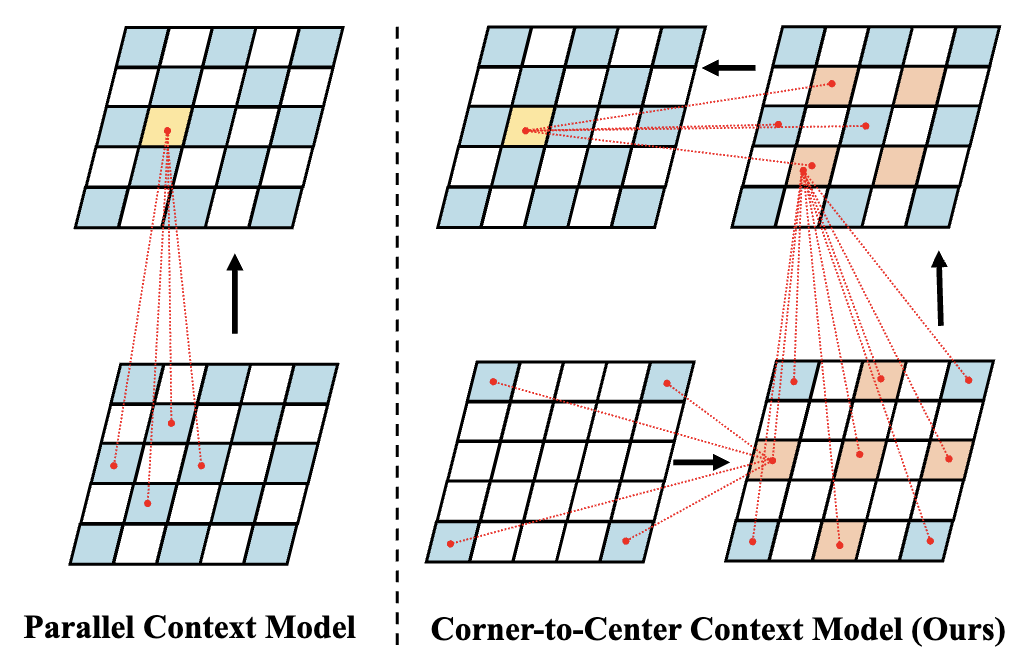

Yang Sui, Ding Ding, Xiang Pan, Xiaozhong Xu, Shan Liu, Bo Yuan, Zhenzhong Chen [JVCI] Journal of Visual Communication and Image Representation

Yang Sui, Zhuohang Li, Ding Ding, Xiang Pan, Xiaozhong Xu, Shan Liu, Zhenzhong Chen [DCC 2024] In Proceedings of the Data Compression Conference, 2024 [NCW@ICML 2023] Neural Compression: From Information Theory to Applications Spotlight presentation Website

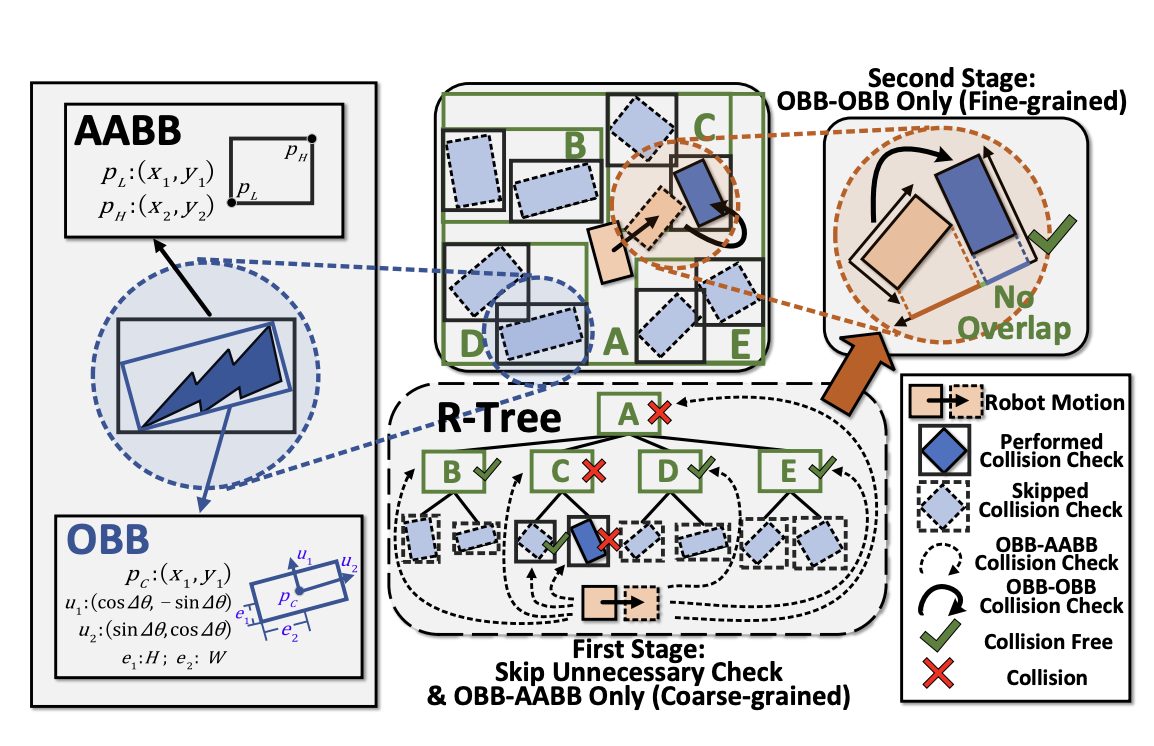

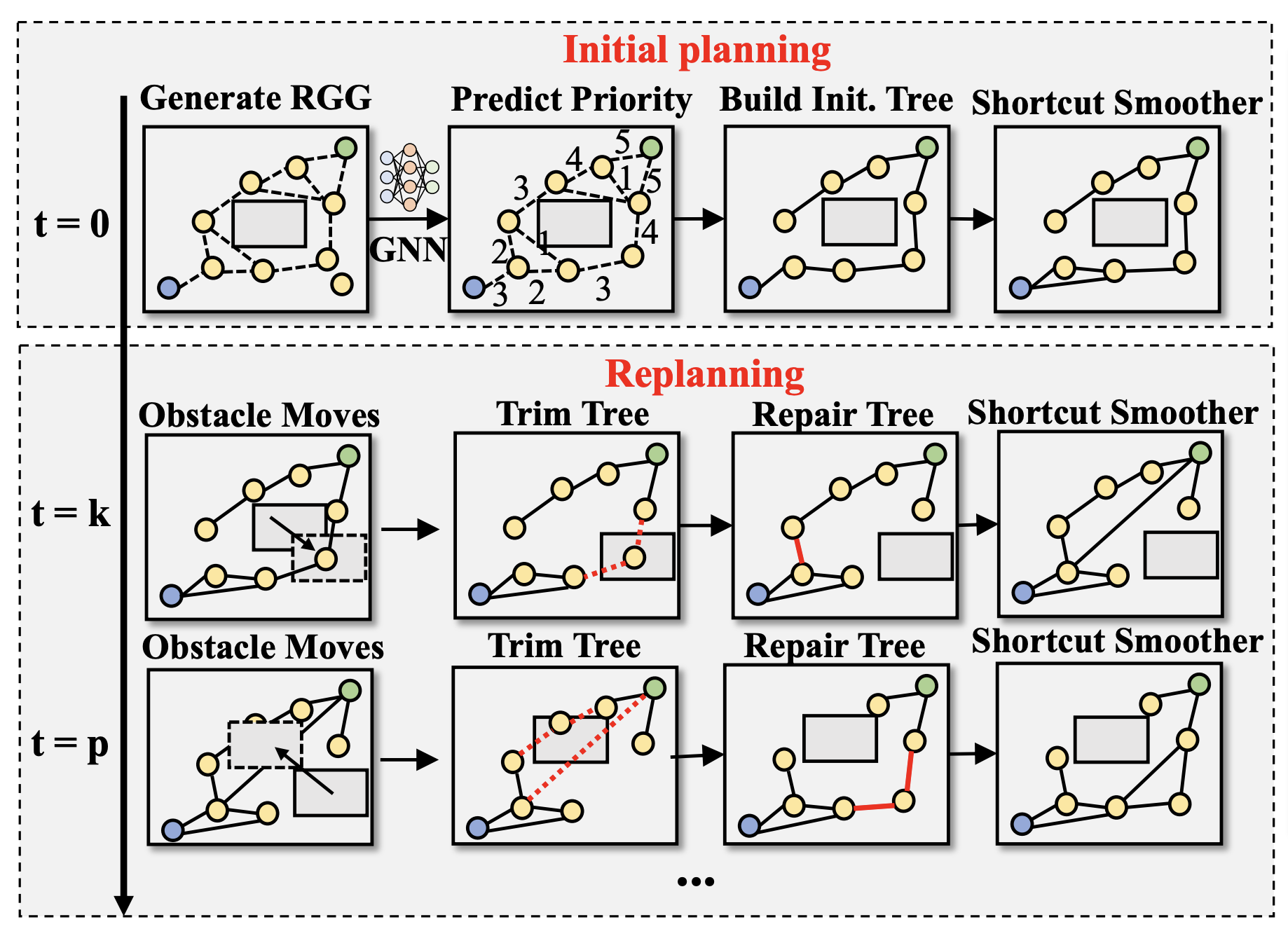

Wenjin Zhang, Xiao Zang, Lingyi Huang, Yang Sui, Jingjin Yu, Yingying Chen, Bo Yuan [IROS 2023] In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2023

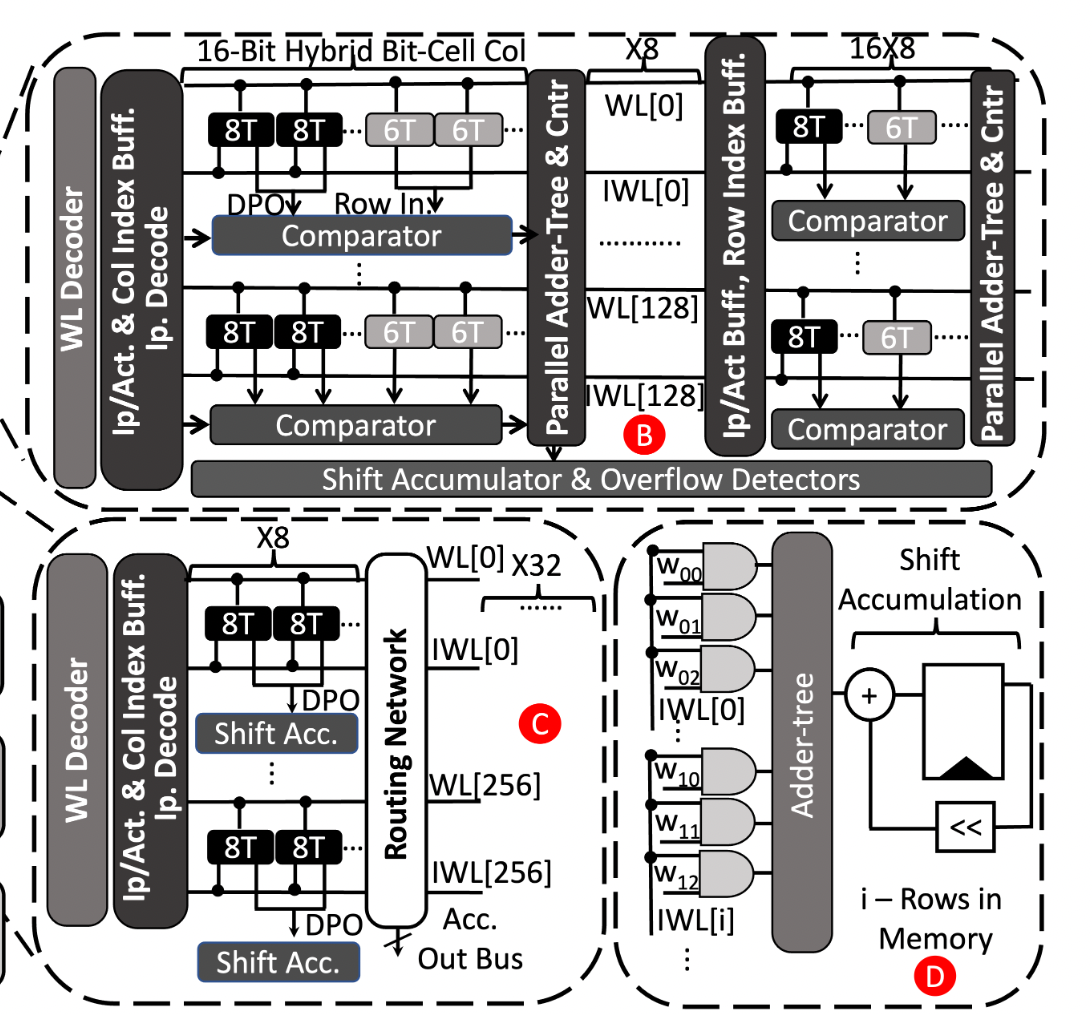

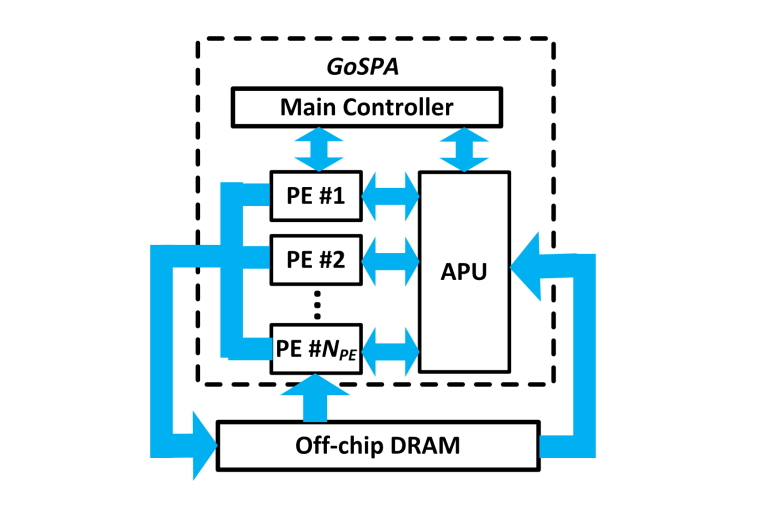

Yu Gong, Miao Yin, Lingyi Huang, Jinqi Xiao, Yang Sui, Chunhua Deng, Bo Yuan [ISCA 2023] In Proceedings of the 50th International Symposium on Computer Architecture, 2023

Amitesh Sridharan, Fan Zhang, Yang Sui, Bo Yuan, Deliang Fan [DAC 2023] In Proceedings of the 60th ACM/IEEE Design Automation Conference, 2023

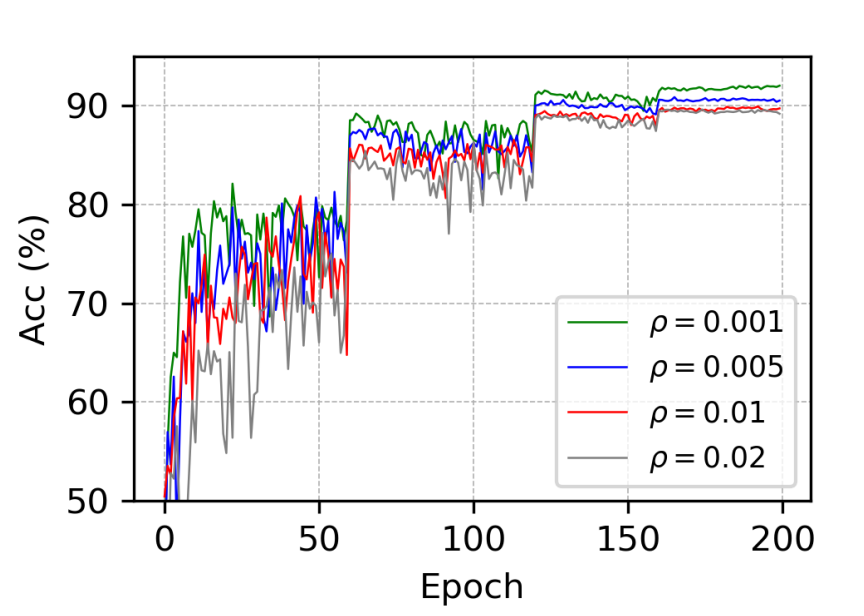

Yang Sui, Wanzhao Yang, Miao Yin, Yu Gong, Bo Yuan [DCAA@AAAI 2023] DCAA, The First Workshop on DL-Hardware Co-Design for AI Acceleration Website Award Best Paper Runner-Up Award

Huy Phan, Miao Yin, Yang Sui, Bo Yuan, Saman Zonouz [AAAI 2023] In Proceedings of the AAAI Conference on Artificial Intelligence, 2023 Oral

Jinqi Xiao, Chengming Zhang, Yu Gong, Miao Yin, Yang Sui, Lizhi Xiang, Dingwen Tao, Bo Yuan [AAAI 2023] In Proceedings of the AAAI Conference on Artificial Intelligence, 2023 Oral

Yang Sui, Miao Yin, Yu Gong, Jinqi Xiao, Huy Phan, Bo Yuan [arXiv]

2022

Yu Gong, Miao Yin, Lingyi Huang, Chunhua Deng, Yang Sui, Bo Yuan [arXiv]

Miao Yin, Yang Sui, Wanzhao Yang, Xiao Zang, Yu Gong, Bo Yuan [CVPR 2022] In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022

2021

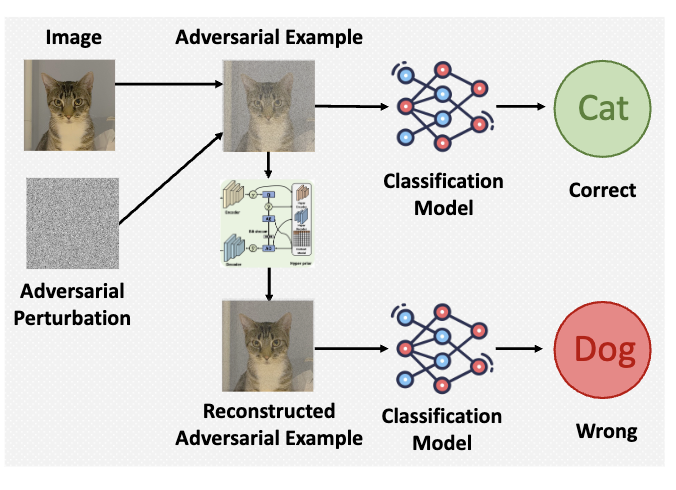

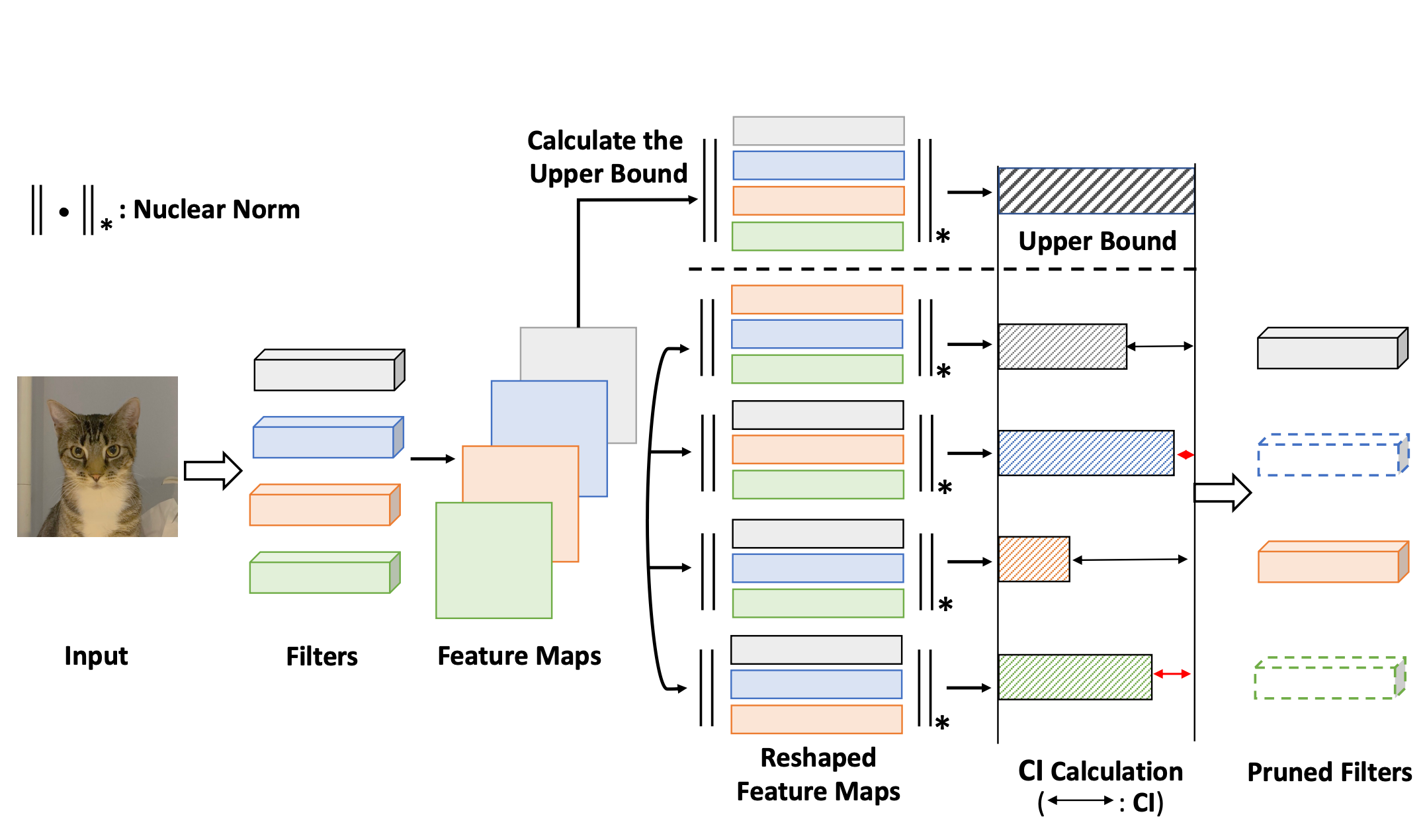

Yang Sui, Miao Yin, Yi Xie, Huy Phan, Saman Zonouz, Bo Yuan [NeurIPS 2021] Advances in Neural Information Processing Systems 34, 2021

Boyang Zhang*, Yang Sui*, Lingyi Huang, Siyu Liao, Chunhua Deng, Bo Yuan [ICCAD 2021] In Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, 2021

Chunhua Deng, Yang Sui, Siyu Liao, Xuehai Qian, Bo Yuan [ISCA 2021] In Proceedings of the ACM/IEEE 48th Annual International Symposium on Computer Architecture, 2021

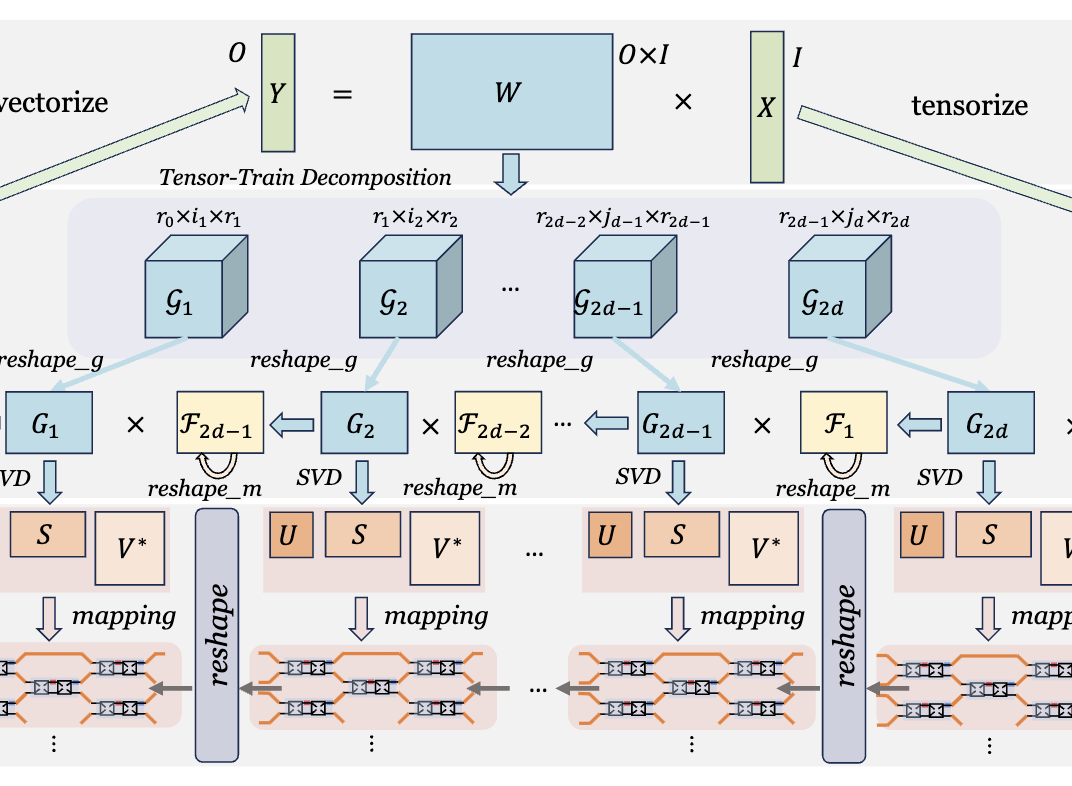

Miao Yin, Yang Sui, Siyu Liao, Bo Yuan [CVPR 2021] In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021

2018

Initial contributors: Yang Sui, Ruilong Liu, Jiaying Zhao, Wang Liu, Yonghui Li. [Baidu] The authors contributed almost equally to this work. Paddle-Lite GitHub (6.4k stars). Paddle-Lite is an updated version of Paddle-Mobile, an open-source deep learning framework designed to make it easy to perform inference on mobile, embedded, and IoT devices. It is compatible with PaddlePaddle and with pre-trained models from other sources, and it was featured at NeurIPS Expo, Baidu Create, and Wave Summit+.

In addition to my academic work, I am a fan of basketball, soccer, Formula 1, and snooker. I love Tracy McGrady, Stephen Curry, Lionel Messi, and PIS (YaphetS). I'm an experienced player of DOTA/DOTA2, World of Warcraft, and Warcraft III, playing Druid (Balance Druid) and DH (Havoc Demon Hunter) in WoW, and NE (Night Elf) in Warcraft III. I would like to record some impressive moments:

I derive great pleasure from listening to music with wonderful rhythm, especially R&B, and classical music by Chopin, Bach, and Paganini. Kim Tae-yeon was my idol during high school, providing me with a strong example and encouragement during my most difficult and depressing times. Xiaolan (whose name is inspired by "Detective Conan"), my adorable grey-and-white cat with sparkling eyes who appears in my NeurIPS'21 paper "CHIP", is playful, affectionate, and loves to cuddle. Say hi, Xiaolan!

*Last updated on 09/2024*
